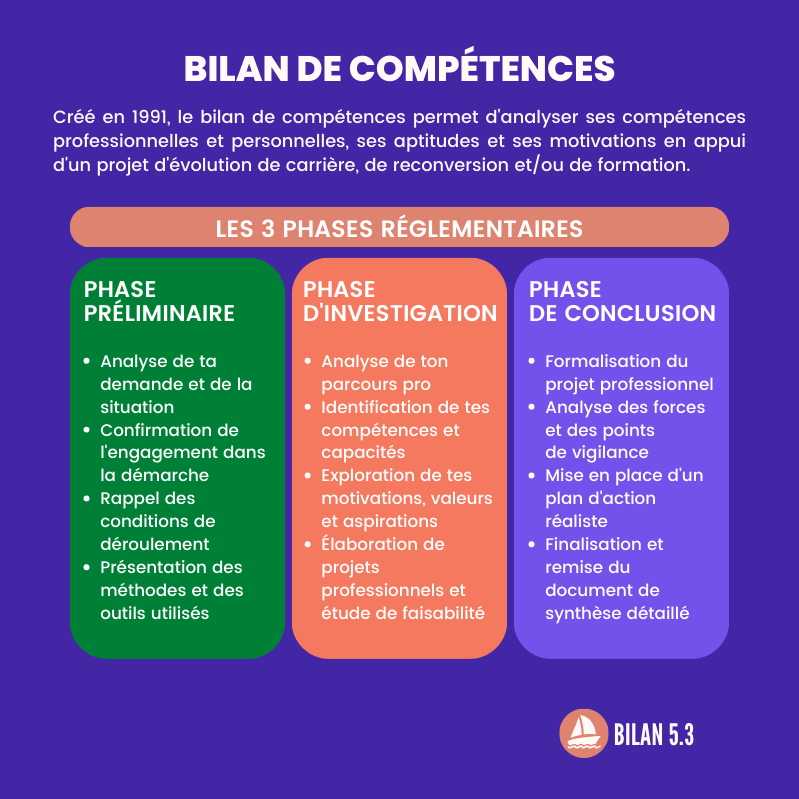

American researchers have developed a machine capable of differentiating between a depressing image and a happy one in just fractions of a second.

With every passing day, machines seem to grow as intelligent as humans, if not more so. Now, thanks to American researchers, a computer can tell the difference between a depressing image and a happy one in a fraction of a second. The results of their study were published on Wednesday, July 24 in the journal Science Advances.

“Machine learning systems are becoming very good at recognizing the content of images, at understanding what kind of subject it is,” explains Tor Wager, senior author of the study and a researcher at the University of Colorado Boulder (United States), by way of introduction. “We wondered: could they do the same with emotions? The answer is yes.”

Building on previous work that linked stereotyped emotional responses to images, the researchers developed a system to predict how a person would feel when seeing a particular image. They then showed this system, called EmoNet, 25,000 different images and asked it to classify them into 20 categories such as craving, sexual desire, horror, fear or surprise.

Eleven types of emotion accurately identified

The upshot: the machine accurately categorized eleven types of emotion (95% correct answers). Even more impressive, a simple color was enough for EmoNet to predict a reaction: it associated black with anxiety and red with craving. Finally, when the researchers showed it brief clips from movies and asked it to classify them as romantic comedy, action movie or horror movie, it answered correctly three-quarters of the time.

To fine-tune the system, the researchers brought in 18 human subjects. While an MRI scanner measured their brain activity, they were shown 112 images in four-second flashes.

By comparing EmoNet's activity with that of the participants' brains, the researchers “found a match between patterns of brain activity in the occipital lobe and units in EmoNet that register specific emotions. This means that EmoNet learned to represent emotions in a biologically plausible way, although we haven’t specifically trained it to do so.”

“Our brains recognize emotions very early”

By examining the participants' brain scans, the researchers also noticed that even a basic image was enough to trigger emotion-related activity in the brain's visual cortex. “Our brains recognize them (emotions, editor’s note), categorize them and respond to them very early,” says Tor Wager enthusiastically. So what we see, even briefly, may have a bigger impact on our emotions than we might expect.

“Many people assume that humans evaluate their environment in a certain way and that emotions stem from older brain systems, like the limbic system,” adds Philip Kragel, co-author of the study and a postdoctoral research associate at the Institute of Cognitive Science. “We found that the visual cortex itself also plays an important role in how emotion is processed and perceived.”

Eventually, systems like EmoNet could be used to help people digitally filter out negative images or find positive ones. They could also help improve human-computer interaction and advance research on emotions, the researchers say. Their conclusion: “What you see can make a big difference in your emotional life.”
