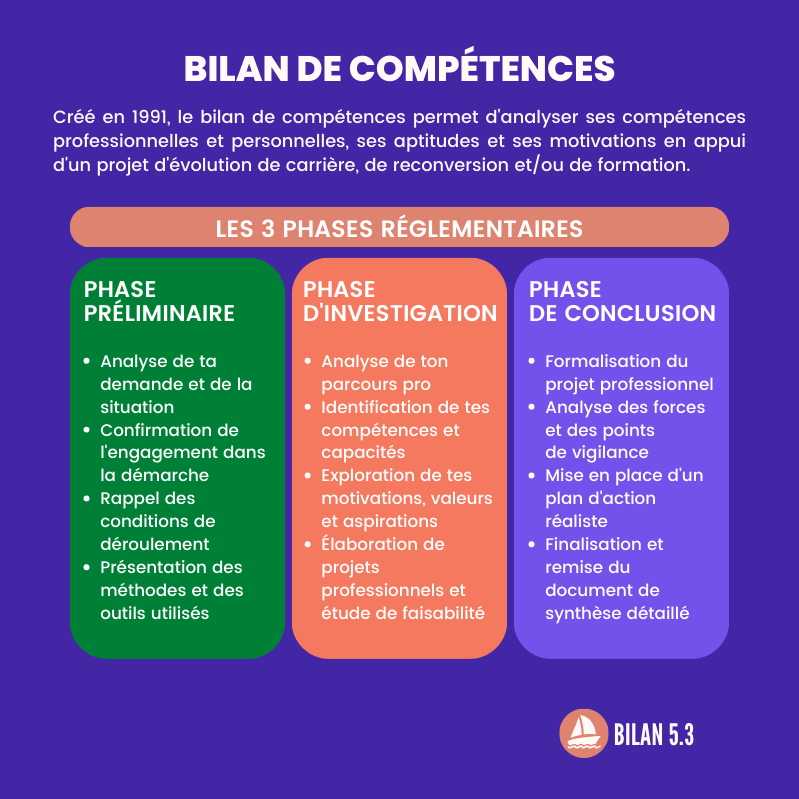

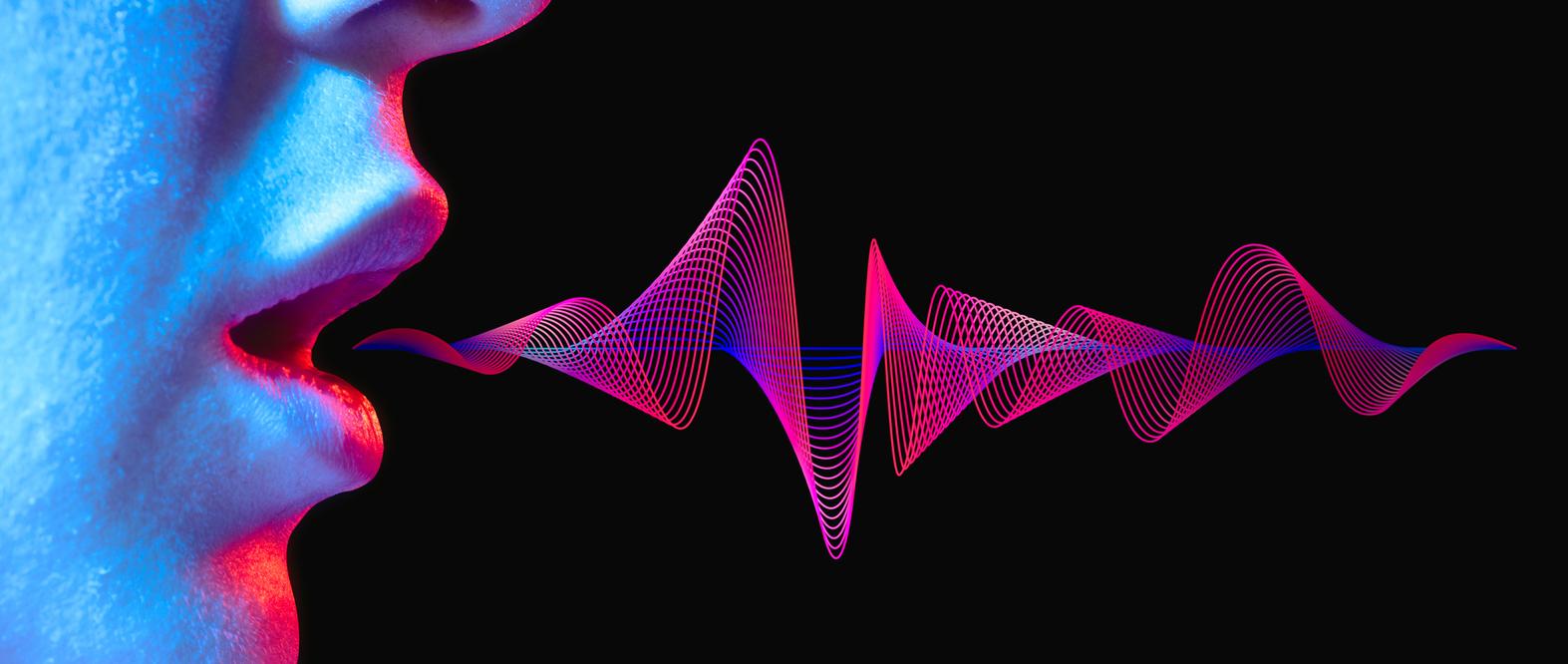

Researchers have succeeded in reconstructing the speech of a person who only moves their mouth, without vocalizing.

Give a voice to the mute? This hope may soon become reality, according to a study recently published in the journal PLOS Computational Biology. French researchers from CNRS and Inserm have designed a voice synthesizer that can be controlled in real time using only articulatory movements. The synthesizer can reconstruct the speech of a person articulating “silently”, that is, moving their tongue, lips and jaw without vocalizing.

Synthetic voice

For several years now, researchers from the CRISSP team and the BrainTech Laboratory have been working on a system capable of capturing a set of physiological signals linked to this “silent articulation”, and converting them in real time into a synthetic voice.

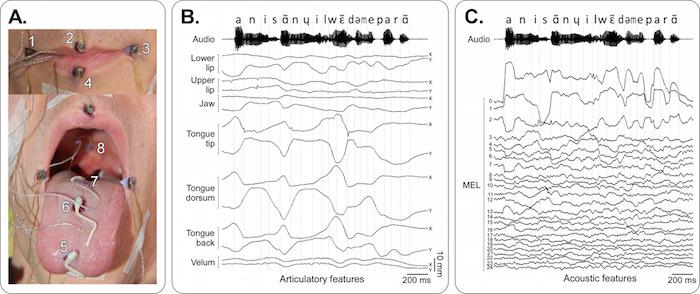

To do this, they had to develop a machine learning algorithm that decodes these articulatory movements, captured by sensors placed on the tongue, lips and jaw, and converts them in real time into synthetic speech.

PLOS / Bocquelet et al. / CNRS

Brain-machine interface

The algorithm, which has already been the subject of several publications, is now ready. In their latest work, the researchers explain that they have achieved a high degree of usability: the controllable synthesizer can be used “a priori by any speaker (after a short period of system calibration). In addition, there is no longer any restriction on the vocabulary, as is typically the case in automatic lip-reading systems”.

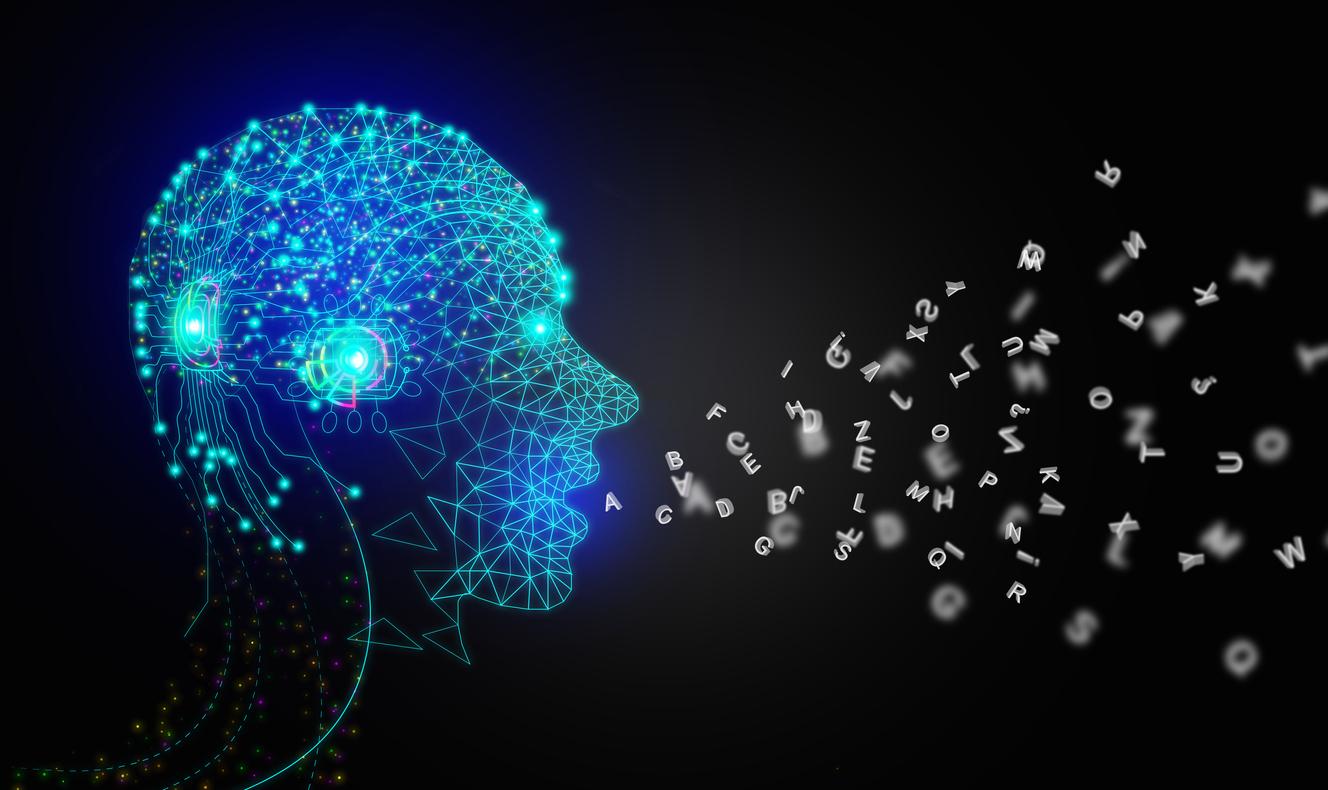

“These new results are a necessary step towards an even more ambitious goal”, the authors note. The researchers are currently working on a brain-machine interface for speech restoration, whose ultimate goal is to reconstruct speech in real time, this time directly from brain activity.
