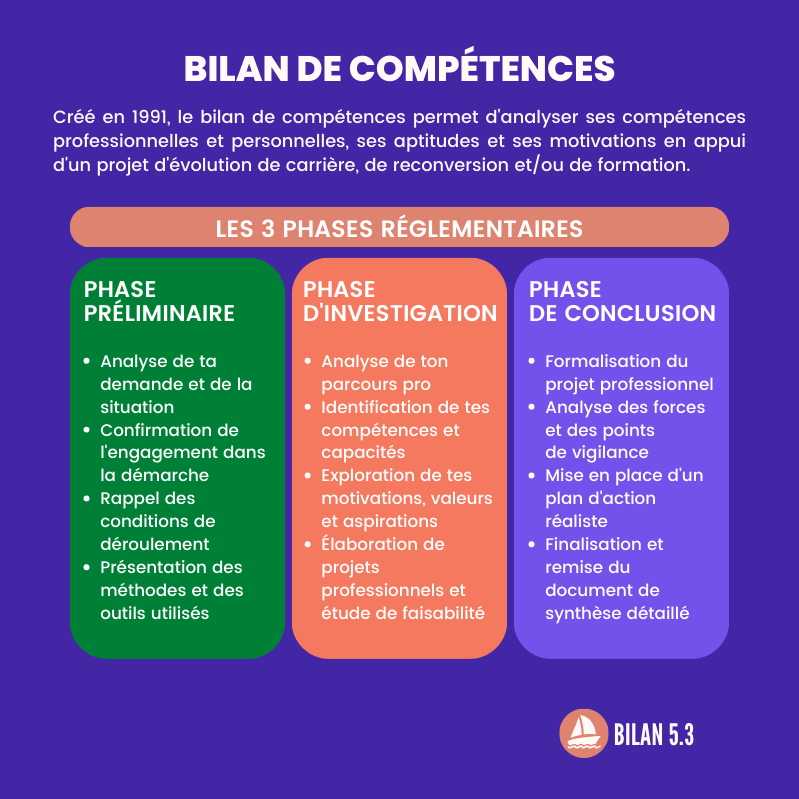

Contrary to a long-held theory, the brain processes sounds and speech simultaneously.

- The two regions of the brain that process sound and speech, the auditory cortex and the superior temporal gyrus, begin to process acoustic information at the same time.

- These findings could lead to new ways to treat conditions such as dyslexia, where children have difficulty identifying speech sounds.

How the brain processes language sounds may have been misunderstood. For many years, it was accepted that the brain first deals with acoustic information before transforming it into linguistic information. A new study, published on August 18 in the journal Cell, challenges this theory and suggests that the brain processes sounds and speech simultaneously.

An old theory that lacks evidence

When speech sounds reach the ears, they are converted into electrical signals by the cochlea and sent to a region of the brain called the auditory cortex, in the temporal lobe. For decades, scientists thought that speech processing in the auditory cortex followed a serial pathway, similar to an assembly line in a factory. First, the thinking went, the primary auditory cortex processes simple acoustic information, such as the frequencies of sounds. Then an adjacent region, the superior temporal gyrus (STG), extracts features more important to speech, such as consonants and vowels, turning sounds into meaningful words.

Direct evidence for this model is lacking because it is difficult to obtain, which has led researchers to consider that another mechanism may exist. “So we embarked on this study, hoping to find evidence for this – the transformation of the low-level representation of sounds into a high-level representation of words,” says Edward Chang, neuroscientist and neurosurgeon at the University of California, San Francisco, and lead author of the study.

A pioneering study

For seven years, the researchers studied nine participants who had to undergo brain surgeries for medical reasons, such as the removal of a tumor or the localization of an epileptic focus. For these procedures, arrays of small electrodes were placed to cover their entire auditory cortex to collect neural signals for language and seizure mapping. Participants also volunteered to have the recordings analyzed to understand how the auditory cortex processes speech sounds.

“This is the first time that we can cover all these areas simultaneously directly from the surface of the brain and study the transformation of sounds into words,” said Edward Chang. Previous attempts to study activity in this region largely involved inserting a wire into it, which could reveal signals at only a limited number of points.

Simultaneous work

When short sentences were spoken to participants, the researchers expected to find a flow of information from the primary auditory cortex to the adjacent STG, as the traditional model asserts. If that were the case, the two regions would activate one after the other. To their surprise, the scientists found that certain areas in the STG responded as quickly as the primary auditory cortex when sentences were spoken, suggesting that both areas started processing acoustic information at the same time.

Additionally, as part of clinical language mapping, the researchers stimulated the participants’ primary auditory cortex with small electrical currents. If speech processing followed a serial pathway, as the traditional model suggests, the stimulation would likely distort patients’ speech perception. Instead, while the participants experienced auditory hallucinations induced by the stimulation, they were still able to clearly hear and repeat the words spoken to them. However, when the STG was stimulated, participants reported that they could hear people talking but could not distinguish words.

Better treatment for dyslexia

These findings offer new insight into how our brains work and could lead to new ways of treating conditions such as dyslexia, where children have difficulty identifying speech sounds. “Although this is an important step forward, we do not yet fully understand this parallel auditory system,” Edward Chang cautions. “The results suggest that the conveyance of sound information could be very different from what we had imagined. It certainly raises more questions than it answers.”
