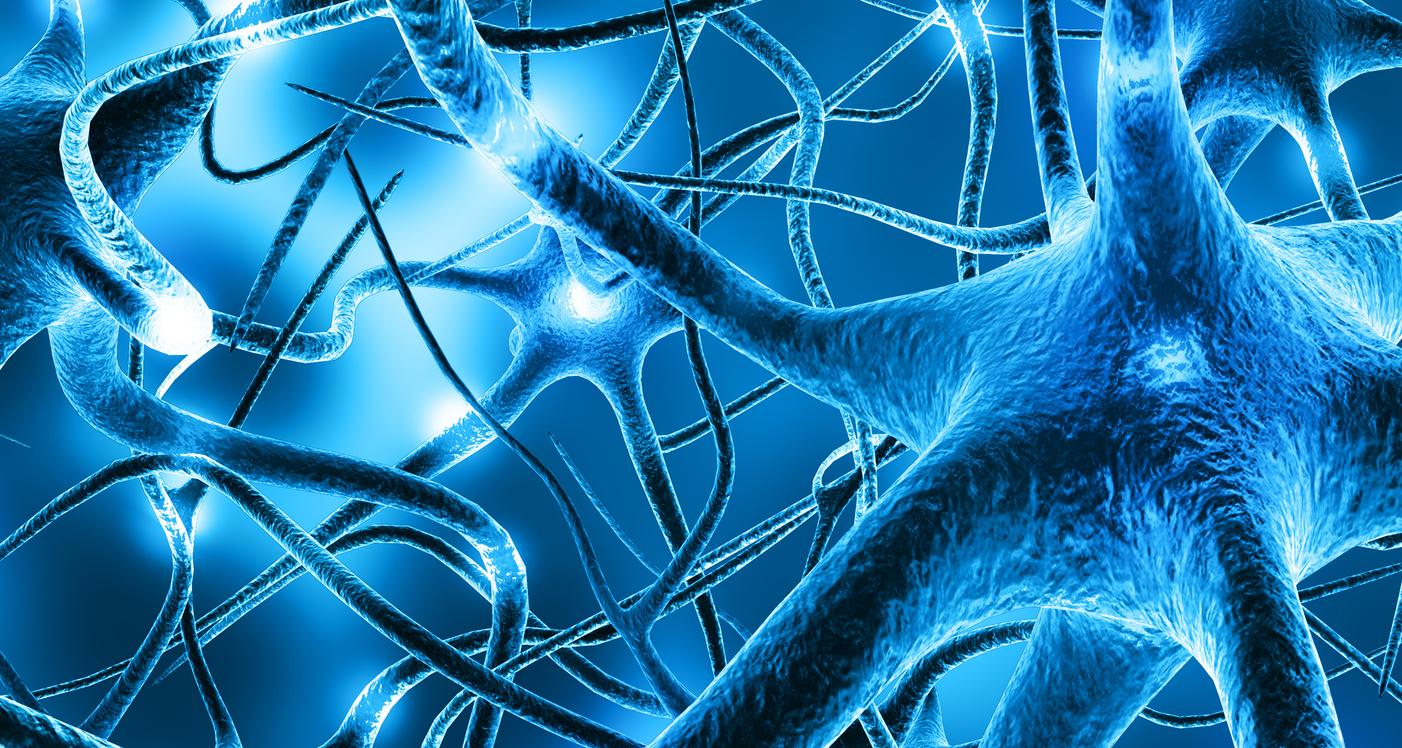

Synapses, the connections between neurons, allow us to form memories. Researchers have managed to quantify how much information they store.

- Synapses are spaces between neurons that allow the transmission of information.

- They appear to have a storage capacity ten times greater than previously thought.

- Researchers made this discovery using new computational tools on the synapses of rats.

Our brain is more powerful than we imagined. In the specialized journal Neural Computation, scientists from the Salk Institute in the United States explain that they have discovered that neuronal connections can store ten times more information than previously thought. “When a message passes through the brain, it passes from one end of a neuron, called a dendrite, to another,” the authors explain. “Each dendrite is covered in tiny bulbous appendages, called dendritic spines, and at the end of each dendritic spine is the synapse, a small space where the two cells meet and an electrochemical signal is transmitted.” These synapses are activated to send different messages between neurons.

How do synapses impact our memory?

Using computational techniques, the American scientists created a tool to measure synaptic strength, the precision of plasticity, and the amount of information stored. “Quantifying these three synaptic features can improve scientific understanding of how humans learn and remember, as well as how these processes change over time or deteriorate with age or disease,” the authors note.

Significant storage capacities in synapses

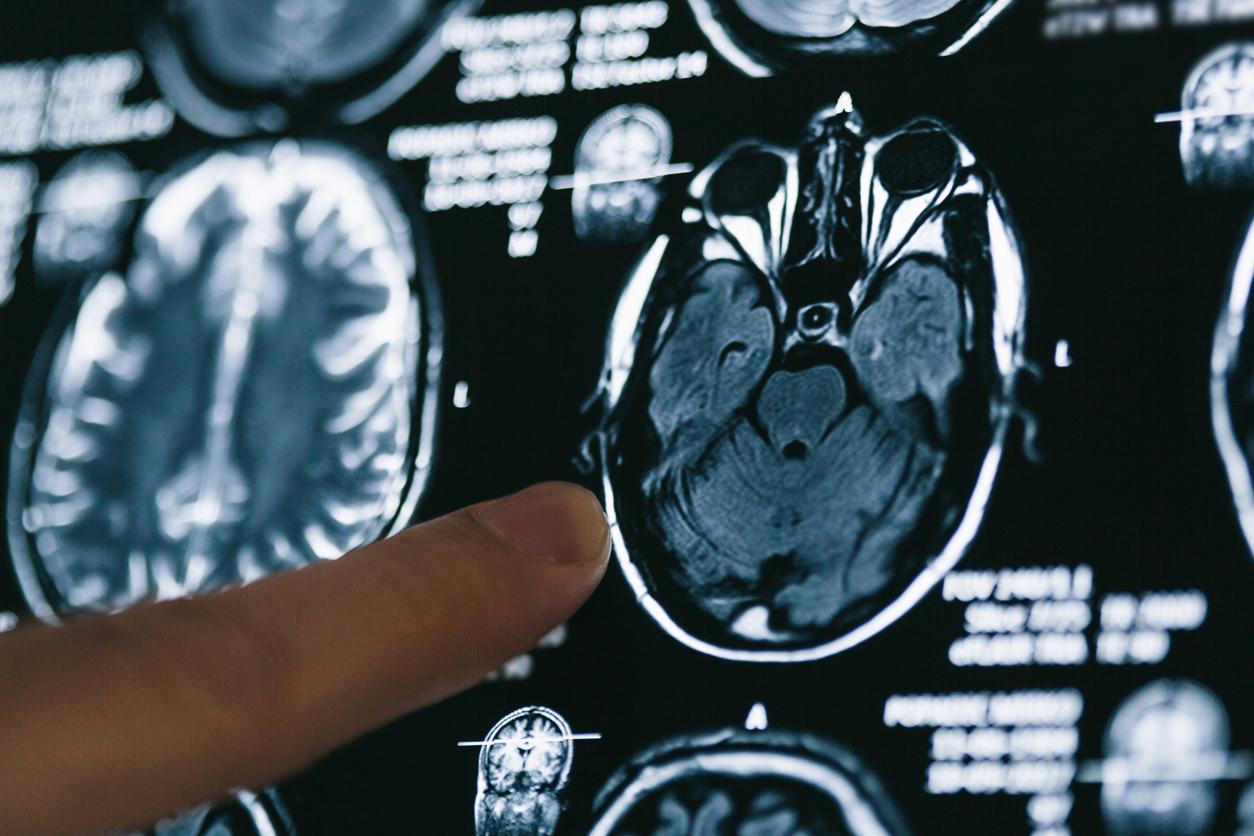

In particular, they applied the concepts of information theory, a mathematical framework for understanding information processing. They used it on pairs of synapses in a rat hippocampus, a part of the brain involved in learning and memory, to determine these three parameters. “We divided synapses by strength, of which there were 24 possible categories, and then compared pairs of synapses to determine how precisely the strength of each synapse is modulated,” explains Mohammad Samavat, lead author of the study. They noticed similarities in the strength of different synapses, “which means the brain is very precise in weakening or strengthening synapses over time,” the researcher notes.

At the same time, they measured the quantity of information contained in the different categories. “Each of the 24 synaptic strength categories contained a similar amount (between 4.1 and 4.6 bits) of information,” they conclude. “Compared to older techniques, this new approach using information theory is more in-depth, accounting for 10 times more information storage in the brain than previously thought.”
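To put the figures in context: in information theory, a quantity that can take 24 distinguishable values carries at most log2(24) bits, about 4.58, and it reaches that ceiling only when all 24 values are equally likely. The short Python sketch below illustrates this general principle. It is not the study's method, and both probability distributions are hypothetical, chosen only to show how an uneven distribution falls below the ceiling.

```python
import math

def shannon_entropy_bits(probabilities):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

N_CATEGORIES = 24  # number of synaptic strength categories cited in the article

# Hypothetical case 1: all 24 categories equally likely (the theoretical ceiling).
uniform = [1 / N_CATEGORIES] * N_CATEGORIES
max_bits = shannon_entropy_bits(uniform)

# Hypothetical case 2: an invented, uneven distribution (not data from the study).
weights = [1 + (i % 4) for i in range(N_CATEGORIES)]
skewed = [w / sum(weights) for w in weights]
skewed_bits = shannon_entropy_bits(skewed)

print(f"uniform over 24 categories: {max_bits:.2f} bits")
print(f"uneven distribution:        {skewed_bits:.2f} bits")
```

Any uneven distribution over the same 24 categories yields less than the uniform maximum, which is one way to read a reported range whose upper end sits near 4.6 bits.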

A promising technique for studying the brain

According to the scientists, this new tool for measuring synaptic strength and plasticity could be used more widely. “This technique will be of considerable help to neuroscientists,” says Kristen Harris, professor at the University of Texas at Austin and co-author of the study. “It could really propel research into learning and memory, and we can use it to explore these processes in all the different parts of the human brain, the animal brain, the young brain, and the aged brain.” It could notably be used in research on Alzheimer’s disease.