In the world of artificial intelligence, one of the most fascinating and powerful ideas is the neural network. Neural networks are the foundation of many modern AI systems — from recognizing faces in photos to generating human-like text. But what exactly are they, and how do they “learn”?

What is a neural network?

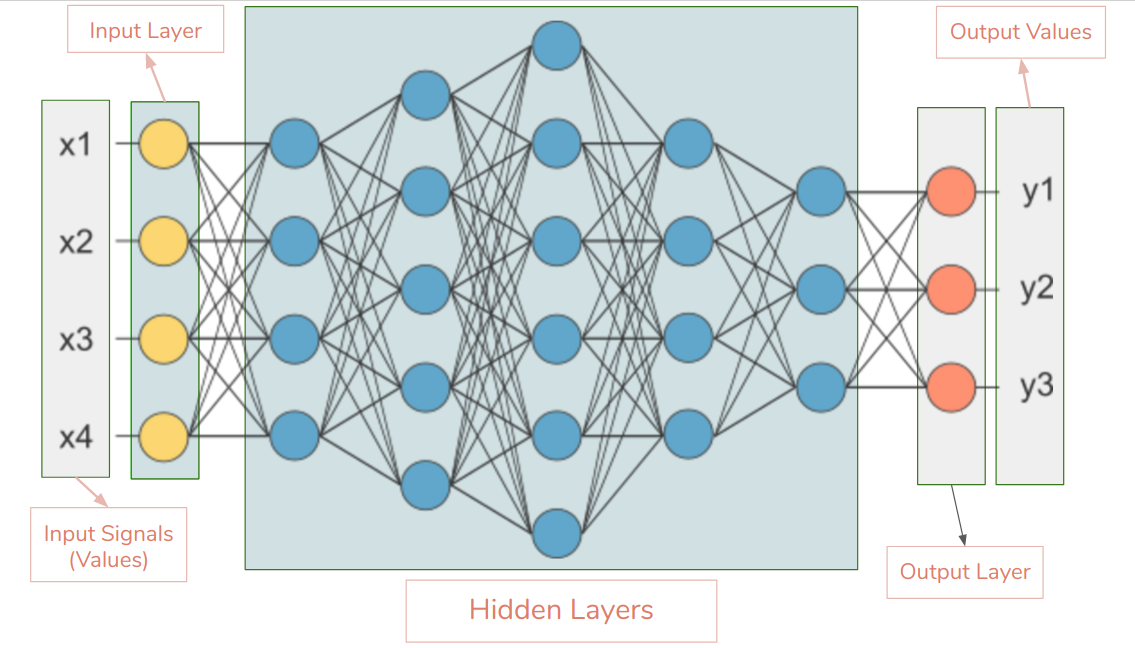

A neural network is a type of machine learning model inspired by the structure of the human brain. Just as the brain contains billions of neurons connected together, a neural network consists of many artificial neurons arranged in layers. Each neuron receives input, performs a simple calculation, and passes the result to other neurons.
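To make "a simple calculation" concrete, here is a minimal sketch of one artificial neuron. It assumes a sigmoid activation, and the input values, weights, and bias are made up purely for illustration; real networks use many activation functions and learn their weights from data.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical values chosen only for illustration.
output = neuron(inputs=[0.5, -1.0, 2.0], weights=[0.4, 0.3, -0.2], bias=0.1)
print(output)  # roughly 0.40
```

The weights decide how much each input matters, and the activation function decides how strongly the neuron "fires" in response.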

The network usually has three types of layers:

- Input layer – where data enters the network (for example, the pixels of an image).

- Hidden layers – where most of the processing happens: each layer applies weighted sums and activation functions to turn the previous layer’s output into more useful features.

- Output layer – which produces the final prediction or decision.

Imagine a simple example: you want a neural network to recognize whether a photo contains a cat or a dog. The input layer receives pixel values from the image, the hidden layers detect features such as edges, colors, and shapes, and the output layer predicts “cat” or “dog.”
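The flow from input layer to output layer can be sketched in a few lines. This is only an illustration under stated assumptions: four made-up "pixel" values stand in for an image, the layer sizes are arbitrary, and the weights are random because the network is untrained, so the resulting probabilities carry no meaning yet. The helper names `dense`, `softmax`, and `random_layer` are hypothetical.

```python
import math
import random

def relu(z):
    """A common activation: pass positive values through, zero out the rest."""
    return max(0.0, z)

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def random_layer(n_in, n_out, rng):
    """Random starting weights and zero biases for an untrained layer."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)
pixels = [0.0, 0.2, 0.9, 0.4]        # input layer: 4 made-up pixel values
w1, b1 = random_layer(4, 3, rng)     # hidden layer with 3 neurons
w2, b2 = random_layer(3, 2, rng)     # output layer: one score per class

hidden = dense(pixels, w1, b1, relu)
scores = dense(hidden, w2, b2, lambda z: z)
probs = softmax(scores)
print(dict(zip(["cat", "dog"], probs)))
```

Training is what turns these arbitrary numbers into weights that produce useful predictions.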

How do neural networks learn?

The learning process of a neural network is called training. During training, the network is shown many examples (called training data) along with the correct answers (called labels). It gradually adjusts its internal parameters to improve its predictions.

Here’s how this happens step by step:

- Forward pass – The input data is fed through the network. Each neuron applies mathematical operations (like weighted sums and activation functions) to produce an output.

- Prediction – The network outputs a guess. For example, it might predict “dog” when shown an image of a cat.

- Loss calculation – The model measures how far off its prediction is using a loss function, which compares the prediction with the correct label. The further off the prediction, the higher the loss.

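- Backpropagation – The network works backward from the output, calculating how much each weight contributed to the error (the gradient of the loss with respect to that weight). An optimization method such as gradient descent then adjusts each weight slightly in the direction that reduces the loss.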

- Repetition – This process is repeated thousands or even millions of times with many examples, until the loss stops improving and the network’s predictions are accurate enough.

This process of adjusting weights using feedback is what allows the network to “learn” patterns from data — not through hard-coded rules, but through experience.
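The training steps above can be sketched with the smallest possible "network": a single sigmoid neuron learning the logical OR function. This is a toy stand-in, not how image classifiers are trained in practice. With only one neuron, the backward pass reduces to a single application of the chain rule. The learning rate, epoch count, and choice of cross-entropy loss are assumptions made for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Training data with labels: logical OR (a toy stand-in for real data).
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

weights = [0.0, 0.0]
bias = 0.0
learning_rate = 0.5   # assumed value: how large each adjustment is

losses = []
for epoch in range(1000):                     # repetition
    total_loss = 0.0
    for inputs, label in data:
        # Forward pass and prediction
        z = sum(x * w for x, w in zip(inputs, weights)) + bias
        pred = sigmoid(z)

        # Loss calculation: binary cross-entropy
        total_loss += -(label * math.log(pred) + (1 - label) * math.log(1 - pred))

        # Backward pass: for sigmoid plus cross-entropy, dLoss/dz = pred - label
        grad_z = pred - label

        # Weight update: step against the gradient (plain gradient descent)
        weights = [w - learning_rate * grad_z * x for w, x in zip(weights, inputs)]
        bias -= learning_rate * grad_z
    losses.append(total_loss / len(data))

predictions = [
    round(sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias))
    for inputs, _ in data
]
print(losses[0], losses[-1])   # the average loss should shrink over training
print(predictions)
```

In a network with hidden layers, the same idea applies, except the gradient is passed backward layer by layer so that every weight receives its own share of the correction.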

A simple example

Let’s imagine a network trained to recognize handwritten digits (0 through 9). At first, its weights are set randomly, so its guesses are no better than chance. But as it processes thousands of labeled images, it starts to learn which pixel patterns correspond to each number.

For example:

- When it sees a circular shape, it might associate that with the number 0.

- When it detects a vertical line with a short curve, it might associate that with the number 1.

- Over time, it refines these associations until it can accurately recognize digits it has never seen before.

This ability to generalize — to make correct predictions on new data — is one of the most powerful features of neural networks.
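Generalization is usually checked by holding some examples back and measuring accuracy on them after training. The sketch below swaps the digit task for a much simpler, made-up one (deciding whether two numbers add up to more than 1) and uses a single sigmoid neuron, so it shows only the train/test idea, not a real digit recognizer. The dataset sizes, learning rate, and helper names are assumptions.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(point, weights, bias):
    return sigmoid(sum(x * w for x, w in zip(point, weights)) + bias)

def make_examples(n, rng):
    """Toy task (an assumption for illustration): label is 1 when x1 + x2 > 1."""
    points = [[rng.random(), rng.random()] for _ in range(n)]
    return [(p, 1.0 if p[0] + p[1] > 1.0 else 0.0) for p in points]

def accuracy(examples, weights, bias):
    """Fraction of examples whose rounded prediction matches the label."""
    correct = sum(1 for p, label in examples if round(predict(p, weights, bias)) == label)
    return correct / len(examples)

rng = random.Random(42)
train_set = make_examples(200, rng)
test_set = make_examples(100, rng)    # never used during training

weights, bias, learning_rate = [0.0, 0.0], 0.0, 0.5
for epoch in range(200):
    for point, label in train_set:
        error = predict(point, weights, bias) - label
        weights = [w - learning_rate * error * x for w, x in zip(weights, point)]
        bias -= learning_rate * error

train_accuracy = accuracy(train_set, weights, bias)
test_accuracy = accuracy(test_set, weights, bias)
print(train_accuracy, test_accuracy)
```

If accuracy on the held-out set is close to accuracy on the training set, the model has learned the underlying pattern rather than memorizing the examples it was shown.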

Why neural networks matter

Neural networks have transformed many fields that rely on pattern recognition or prediction. They power speech recognition in smartphones, language translation, medical image analysis, and self-driving cars. Their flexibility allows them to handle images, text, audio, and even complex sensor data.

However, they also have limitations. Neural networks require large amounts of data and computing power to train, and they can sometimes make mistakes that are hard to interpret. This has led to ongoing research in explainable AI — an effort to understand why a neural network makes certain decisions.

In summary

Neural networks learn by adjusting their internal connections to minimize errors as they process examples. Through repeated practice, they discover patterns in data and become remarkably good at performing tasks that once required human intelligence.

What makes them so powerful is that they don’t need explicit rules: they learn from examples, loosely analogous to how people learn from experience. As research continues, neural networks are likely to become more capable and more deeply integrated into our everyday lives, while work on explainable AI aims to make them more interpretable.